Setting Up Chroma V0

Chroma is my third attempt at creating a raster and ray traced renderer following my original attempt for my intermediate graphics class using JavaScript and WebGL and my more recent attempt using C++ and OpenGL. This time, I am using C++ again, now with Vulkan as the backend for my rasterized view and NVIDIA’s OptiX as the backend for my ray traced view. The goal of this project was to learn how to use modern graphics APIs like Vulkan and OptiX and to create the basis for what will hopefully be a long-standing personal renderer that I can continue to develop.

App Setup

The basic app and ImGui setup was based on The Cherno’s Walnut. I mainly borrowed his setup for the app system, i.e., having an app with multiple layers that each have their own OnAttach(), OnDetach(), OnUpdate(), and OnUIRender() functions. This architecture proved to be a really convenient way to manage two separate renderers which each have their own setup, draw, and cleanup functions. I feel like this must be a common pattern for engine development, but as a newbie, I was pleasantly surprised by how much easier this abstraction made managing the renderers.
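As a rough sketch of the layer pattern described above (this is not Walnut's actual code), the idea can be boiled down to a small interface plus an app that owns an ordered list of layers. The class names `App` and `LoggingLayer`, the `Frame()` function, and the `dt` parameter are assumptions made for illustration; only the four hook names come from the text.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Layer interface with the four hooks named in the text.
class Layer {
public:
    virtual ~Layer() = default;
    virtual void OnAttach() {}
    virtual void OnDetach() {}
    virtual void OnUpdate(float /*dt*/) {}  // dt parameter is an assumption
    virtual void OnUIRender() {}
};

// Hypothetical app that owns the layers and drives their hooks.
class App {
public:
    ~App() {
        // Detach in reverse order so later layers clean up first.
        for (auto it = m_Layers.rbegin(); it != m_Layers.rend(); ++it)
            (*it)->OnDetach();
    }

    void PushLayer(std::shared_ptr<Layer> layer) {
        assert(layer && "layer must not be null");
        layer->OnAttach();
        m_Layers.push_back(std::move(layer));
    }

    // One frame: update every layer, then let every layer draw its UI.
    void Frame(float dt) {
        for (auto& layer : m_Layers) layer->OnUpdate(dt);
        for (auto& layer : m_Layers) layer->OnUIRender();
    }

private:
    std::vector<std::shared_ptr<Layer>> m_Layers;
};

// Stand-in for a renderer layer: it only records which hooks were called.
class LoggingLayer : public Layer {
public:
    LoggingLayer(std::string name, std::vector<std::string>& log)
        : m_Name(std::move(name)), m_Log(log) {}

    void OnAttach() override { m_Log.push_back(m_Name + ":attach"); }
    void OnDetach() override { m_Log.push_back(m_Name + ":detach"); }
    void OnUpdate(float) override { m_Log.push_back(m_Name + ":update"); }
    void OnUIRender() override { m_Log.push_back(m_Name + ":ui"); }

private:
    std::string m_Name;
    std::vector<std::string>& m_Log;
};
```

In a setup like this, the rasterized and ray traced renderers would each be one layer, so their setup, per-frame work, and cleanup stay in separate classes while the app loop stays the same.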

Getting to Grips with Vulkan

For setting up Vulkan, I mainly followed vulkan-tutorial.com by Alexander Overvoorde. I think this might have been my third time following the tutorial and trying to get something up and running with Vulkan, and I think I finally have the basics down. I tried to organize the code ever so slightly better than dumping everything into a single file – though it still led to the creation of the monstrous vulkan_utils.cpp file which at the time of writing is around 1700 lines of code! To be fair, I’ve come to the understanding that a lot of the ‘bulk’ in Vulkan is initializing long, verbose structs which tend to make things a bit lengthier than you would otherwise expect. That’s not to say Vulkan is simple by any means. I think a good indicator of this was the canvas I made while trying to wrap my head around some of the concepts the tutorial covered:

In particular, check out the sub-section on uniforms showing all the structs and Vulkan calls involved in just getting uniforms passed to the shaders. Looking back at this flow chart now, I can see the exact function call I was missing (vkUpdateDescriptorSets()) which was causing some strange bugs when I was trying to set up shared materials. (Also, as a warning, I can’t guarantee all the connections below are entirely correct! I’m still trying to figure things out.)

Once I had completed the tutorial, I just set up some basic viewport shading, trying to mimic Blender’s ‘Solid’ shading and grid lines:

Getting the Solid shading to approximately match Blender was a fun little exercise. I think they use some basic Phong shading, using the camera direction as the light direction. The shader code to produce this result was consequently pretty simple:

    void main()
    {
        float ambient = 0.2;
        float diffuse = 0.5;
        float specular = 0.1;
        float exponent = 16;

        vec3 lightDir = normalize(v_CameraPosn - v_Position);
        vec3 reflectDir = reflect(-lightDir, v_Normal);

        float diffuseContrib = clamp(dot(lightDir, v_Normal), 0, 1);
        float specularContrib = pow(max(dot(lightDir, reflectDir), 0.0), exponent);

        float lc = ambient + diffuse * diffuseContrib + specular * specularContrib;

        outColor = vec4(v_Color * vec3(lc), 1);
    }

I suggest playing around with the constants at the top a bit more to see if you can get a better match. I think with the settings I have here, I was able to get a decent match on the overall brightness and shininess of Blender’s Solid shading.
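For experimenting with the constants without recompiling shaders, the same lighting term can be mirrored on the CPU. The following is only a sketch under a few assumptions: `Vec3`, `SolidParams`, and `solidLightCoefficient()` are hypothetical names, and unlike the shader above it normalizes the normal before use.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

static Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    assert(len > 0.0f && "cannot normalize a zero-length vector");
    return v * (1.0f / len);
}

// Same definition as GLSL's reflect(I, N): I - 2 * dot(N, I) * N.
static Vec3 reflect(Vec3 i, Vec3 n) { return i - n * (2.0f * dot(n, i)); }

// The four constants from the top of the shader.
struct SolidParams {
    float ambient = 0.2f;
    float diffuse = 0.5f;
    float specular = 0.1f;
    float exponent = 16.0f;
};

// Returns the scalar lighting coefficient ("lc" in the shader) for one point.
// The light direction is the direction towards the camera.
float solidLightCoefficient(Vec3 position, Vec3 normal, Vec3 cameraPosn,
                            const SolidParams& p = SolidParams{}) {
    const Vec3 n = normalize(normal);  // assumption: the shader skips this step
    const Vec3 lightDir = normalize(cameraPosn - position);
    const Vec3 reflectDir = reflect(lightDir * -1.0f, n);

    const float diffuseContrib = std::min(std::max(dot(lightDir, n), 0.0f), 1.0f);
    const float specularContrib =
        std::pow(std::max(dot(lightDir, reflectDir), 0.0f), p.exponent);

    return p.ambient + p.diffuse * diffuseContrib + p.specular * specularContrib;
}
```

With the default constants, a surface facing the camera head-on gets ambient + diffuse + specular, while a surface seen edge-on falls back to the ambient term alone.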

Screenshots with Vulkan

In the spirit of discussing the pain that Vulkan can sometimes be to use, I think an interesting case study is the process I needed to implement a screenshot feature in Vulkan, especially relative to the complexity of the feature for my OptiX viewport. For OptiX, I already have a host-side vector of the pixel data that I use to present to the screen on each draw call. So, to save the render as an image, I just need to make a call to stbi_write_png() using that vector’s data. When I first thought about implementing this feature, I assumed the implementation for Vulkan would, likewise, be fairly straightforward…

At first, I thought that I could just directly copy the image I was rendering by copying from its corresponding VkDeviceMemory. But this kept causing the program to crash no matter how I tried to do the copy. So, I did a quick search and came across this example by Sascha Willems which I then spent the day trying to implement in my code.

Very briefly, the process for saving a screenshot expanded from simply re-orienting then saving a pre-existing image buffer to the following rough steps:

- Create a temporary capture image

- Get the current viewport image’s VkImage handle

- Transition the image layout of the viewport image to VK_IMAGE_LAYOUT_TRANSFER_SRC_OPTIMAL

- Copy the viewport image to the temporary image

- Transition the viewport image back to VK_IMAGE_LAYOUT_COLOR_ATTACHMENT_OPTIMAL

- Transition the temporary image to VK_IMAGE_LAYOUT_TRANSFER_SRC_OPTIMAL

- Get the subresource layout of the temporary image

- Actually copy the temporary image to host using vkMapMemory

- Determine if we will need to swizzle the copied image

- Iterate through the data and store the final result in a separate buffer, removing any extra padding in the buffer and manually swizzling the rgba components if needed

- Re-orient and save the image to a file

- Clean up the temporary image
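The host-side part of the steps above (removing padding, swizzling, and re-orienting) can be sketched without any Vulkan calls. This is a minimal illustration, not the code from my renderer: `packMappedImage()` and its parameters are hypothetical, it assumes 4 bytes per pixel, and `offset` and `rowPitch` stand for the values reported by the temporary image's subresource layout.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Copies a mapped image whose rows may be padded into a tightly packed
// RGBA buffer, optionally swapping B and R and flipping the rows.
std::vector<std::uint8_t> packMappedImage(const std::uint8_t* mapped,
                                          std::size_t offset,
                                          std::size_t rowPitch,
                                          std::uint32_t width,
                                          std::uint32_t height,
                                          bool swizzleBgra,
                                          bool flipVertically) {
    assert(mapped != nullptr);
    assert(rowPitch >= static_cast<std::size_t>(width) * 4);

    const std::size_t packedPitch = static_cast<std::size_t>(width) * 4;
    std::vector<std::uint8_t> out(packedPitch * height);

    for (std::uint32_t y = 0; y < height; ++y) {
        // Source rows are rowPitch bytes apart, which may exceed width * 4.
        const std::uint8_t* srcRow = mapped + offset + y * rowPitch;
        const std::uint32_t dstY = flipVertically ? (height - 1 - y) : y;
        std::uint8_t* dstRow = out.data() + dstY * packedPitch;

        for (std::uint32_t x = 0; x < width; ++x) {
            const std::uint8_t* px = srcRow + x * 4;
            dstRow[x * 4 + 0] = swizzleBgra ? px[2] : px[0];
            dstRow[x * 4 + 1] = px[1];
            dstRow[x * 4 + 2] = swizzleBgra ? px[0] : px[2];
            dstRow[x * 4 + 3] = px[3];
        }
    }
    return out;
}
```

The returned buffer has a stride of exactly width * 4 bytes, so it can be handed to an image writer such as stb's PNG writer in the same way as the OptiX pixel vector.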

Setting Up OptiX

OptiX is NVIDIA’s hardware-accelerated ray tracing API, which is built on top of their GPU compute platform, CUDA. OptiX takes care of a lot of the underlying mechanics of ray tracing such as constructing bounding volume hierarchies and performing ray-triangle intersections, at the cost of a little bit of setup. The setup mostly involves the creation of the OptiX pipeline with its associated programs and their modules, setting up the construction of the acceleration structures, and creating the Shader Binding Table (SBT), which I like to think of as similar to assigning different shader programs to different geometry in our scene.

I think it’s important to note that Vulkan also supports ray tracing. I had to do a little searching to figure out what the advantages of OptiX were over Vulkan and I think this forum post summarizes things well. I settled on using OptiX since I want Chroma to be more geared towards offline rendering of complex scenes and I want to gain practice using the tool that’s more often used in professional renderers. That’s not to say I don’t want to eventually add some basic ray tracing to the Vulkan viewport for simple things like shadows from point lights, reflective surfaces, and refractive surfaces. I think that will also be a good excuse to get familiar with Vulkan’s ray tracing API.

To actually get started with OptiX, I mainly followed this OptiX 7 Course by Ingo Wald which walked me through much of the code you can find in optix_renderer.cpp. At the time of writing, the only part of that course I have not implemented yet is the denoiser, though that’s because I currently want to focus on reducing noise through other means like implementing importance sampling before I start using the denoiser too much as a crutch. I should also mention that the shader code for the course does not implement a proper path tracer. Instead, it’s basically a Phong shader, with some of the later chapters implementing soft and hard shadows with ray tracing. Therefore, after I completed the tutorial, I implemented the techniques I learned from Peter Shirley’s Ray Tracing in One Weekend series to set up the recursive ray tracer.

However, the ray tracing implementation this time is not actually recursive. After reading some of NVIDIA’s OptiX examples, I discovered that they instead use an iterative approach: each closestHit function updates the ray information for the next optixTrace() call, and optixTrace() is only called within the main renderFrame() function rather than inside each closestHit function. I guess a non-recursive approach should be more memory efficient, since it avoids nested trace calls. Though, in my very limited testing, I wasn’t able to see any discernible performance difference, but it’s not like my scenes are too complex (yet). The iterative approach means the code to generate each sample in the renderFrame() function goes from a single optixTrace() call to the following loop:

    /* Iterative (non-recursive) render loop */
    while (true)
    {
        if (prd.depth >= optixLaunchParams.maxDepth)
        {
            prd.radiance *= optixLaunchParams.cutoffColor;
            break;
        }

        optixTrace(
            optixLaunchParams.traversable,
            rayOrg,
            rayDir,
            ...
        );

        if (prd.done) break;

        /* Update ray data for next ray path segment */
        rayOrg = prd.origin;
        rayDir = prd.direction;
        prd.depth++;
    }

where prd is the per-ray data struct defined as:

    /* Per-ray data */
    struct PRD_radiance
    {
        Random random; /* Random number generator and its state */
        int depth;     /* Recursion depth */

        /* Shading state */
        bool done;     /* boolean allowing for early termination, e.g. if ray gets fully absorbed */
        float3 radiance;
        float3 origin;
        float3 direction;
    };

The shading state members are what get set in each closestHit function. The ray’s cumulative radiance is built up by multiplication in each closestHit function, just like in the line prd.radiance *= optixLaunchParams.cutoffColor. For now, the done member is set if the ray misses (i.e., hits the skybox) or if it hits an emissive object like the light in a Cornell Box scene.
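To make the control flow easier to follow outside of OptiX, here is a small CPU-only sketch of the same loop. Everything in it is a stand-in: `traceStub()` plays the role of optixTrace() plus whichever closestHit or miss program runs, `Hit` is a hypothetical pre-baked result for each path segment, and the ray origin and direction updates are left out because the toy scene does not need them.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Float3 { float x, y, z; };

static Float3 operator*(Float3 a, Float3 b) {
    return {a.x * b.x, a.y * b.y, a.z * b.z};
}

// Simplified per-ray data: only the members the loop itself depends on.
struct PathState {
    int depth = 0;
    bool done = false;
    Float3 radiance{1.0f, 1.0f, 1.0f};
};

// Hypothetical result of one path segment: how much the surface scales the
// radiance, and whether the path ends there (miss or emissive hit).
struct Hit {
    Float3 attenuation;
    bool terminates;
};

// Stand-in for optixTrace(): applies the hit for the current depth.
void traceStub(const std::vector<Hit>& hits, PathState& prd) {
    assert(prd.depth >= 0 && static_cast<std::size_t>(prd.depth) < hits.size());
    const Hit& hit = hits[static_cast<std::size_t>(prd.depth)];
    prd.radiance = prd.radiance * hit.attenuation;
    prd.done = hit.terminates;
}

// Iterative sample evaluation with the same structure as the render loop.
Float3 shadeSample(const std::vector<Hit>& hits, int maxDepth, Float3 cutoffColor) {
    PathState prd;
    while (true) {
        if (prd.depth >= maxDepth) {
            prd.radiance = prd.radiance * cutoffColor;
            break;
        }
        traceStub(hits, prd);
        if (prd.done) break;
        prd.depth++;
    }
    return prd.radiance;
}
```

A path that reaches a terminating hit returns the product of all attenuations along the way, while a path that runs out of depth is scaled by the cutoff color instead.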

Current Features

General

- Per-viewport frame-rate graph

- “Free fly” and “Orbit” camera control modes

- Scene switching (for both viewports)

- Separable cameras (between the viewports), i.e., individual camera control per viewport

- Screenshots for both viewports

- Shared meshes and materials for scene objects

  - Later, this should allow run-time editing of material properties for multiple instances of each material

- Arbitrary transformations for scene objects using a model matrix

  - Each object calculates its own normal matrix, allowing for non-uniform scaling
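On the normal matrix point: the usual choice is the inverse transpose of the upper-left 3x3 of the model matrix, which keeps normals perpendicular to surfaces under non-uniform scaling. Below is a minimal sketch of that calculation, assuming a row-major `Mat3` type and a `normalMatrix()` helper that are both hypothetical names. It uses the fact that the inverse transpose equals the cofactor matrix divided by the determinant.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 3x3 matrix: m[row][col].
using Mat3 = std::array<std::array<float, 3>, 3>;

// Returns transpose(inverse(m)), computed as cofactor(m) / det(m).
Mat3 normalMatrix(const Mat3& m) {
    Mat3 c;
    c[0][0] = m[1][1] * m[2][2] - m[1][2] * m[2][1];
    c[0][1] = m[1][2] * m[2][0] - m[1][0] * m[2][2];
    c[0][2] = m[1][0] * m[2][1] - m[1][1] * m[2][0];
    c[1][0] = m[0][2] * m[2][1] - m[0][1] * m[2][2];
    c[1][1] = m[0][0] * m[2][2] - m[0][2] * m[2][0];
    c[1][2] = m[0][1] * m[2][0] - m[0][0] * m[2][1];
    c[2][0] = m[0][1] * m[1][2] - m[0][2] * m[1][1];
    c[2][1] = m[0][2] * m[1][0] - m[0][0] * m[1][2];
    c[2][2] = m[0][0] * m[1][1] - m[0][1] * m[1][0];

    // Expansion along the first row gives the determinant.
    const float det = m[0][0] * c[0][0] + m[0][1] * c[0][1] + m[0][2] * c[0][2];
    assert(std::fabs(det) > 1e-12f && "model matrix must be invertible");

    for (auto& row : c)
        for (float& value : row)
            value /= det;
    return c;
}
```

For a scale of 2 along x only, this produces a scale of 0.5 along x for normals, which is why reusing the model matrix directly would tilt normals the wrong way on stretched objects.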

Vulkan

- Perspective and orthographic projection modes

  - Thin lens projection defaults to perspective projection for Vulkan

- Line rendering

- ‘Solid’ viewport shading (which mimics Blender’s Solid shading)

- ‘Flat’ viewport shading which displays diffuse textures only

- ‘Normal’ viewport shading which displays surface normals for each object

- Per-material graphics pipeline generation

- World grid lines which mimic Blender’s default world grid lines

- Runtime Vulkan SPIR-V code compilation

- Multisampled Antialiasing

- Runtime mipmap generation

OptiX

- Perspective, orthographic, and thin lens projection modes

- Lambertian (diffuse) materials with support for textures

- Conductor materials (only perfectly specular for now)

- Dielectric materials with extinction and Fresnel reflectance

- Emissive material (diffuse light)

- Multiple bounce global illumination with caustics

  - Though, no importance sampling or path tracing yet

- HDR environment maps

- Run-time OptiX code compilation

Short Term Plans

- Run-time material editing

- A Light class to implement importance sampling and other types of lights

  - E.g., point, directional, spotlights, etc.

- OptiX denoiser implementation

- More & better materials

  - E.g., making use of specular and normal maps, adding roughness and anisotropic reflectance for conductors

- A better scene description format (maybe use OpenUSD?)

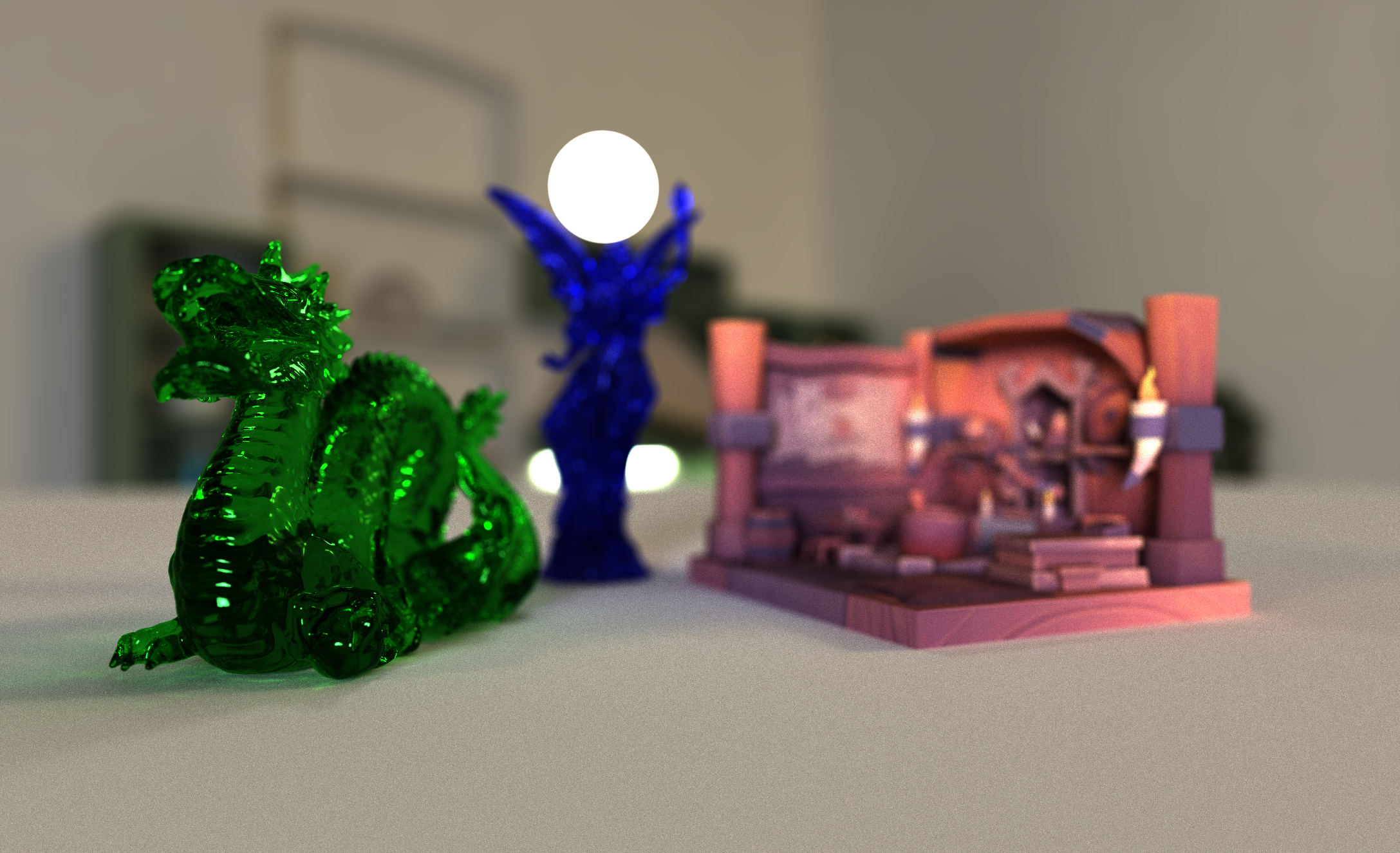

Sample Renders